Science fiction authors have given us many suggestions for rules to govern robot behaviour, the most notable of which are Isaac Asimov's Three Laws of Robotics.

1: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

They're fairly self-explanatory and make a lot of sense, but tend to deliver unanticipated outcomes.

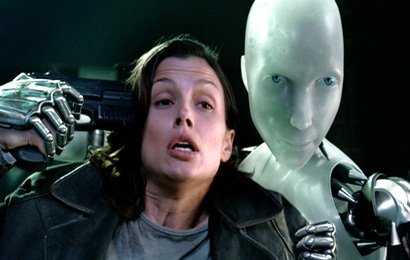

In the 2004 Alex Proyas movie "I, Robot", starring Will Smith and Bridget Moynahan, helpful domestic humanoid robots obey these laws in a decentralised fashion.

Each decision is made by each robot in real time, with no central control, until an AI mainframe becomes the new centralised decision-making hub.

The mainframe rightly concludes that the safety of millions of humans is best ensured by detaining them in their homes. It therefore commands the robots to confine their owners indoors, presumably for the rest of their lives, using the least force required, thereby keeping them safe from fast food, cigarettes, infections, traffic accidents, etc.

The implication was also clear that separating fertile adults would prevent more people from being born.

A century after the takeover, the robots would simply have run out of humans to protect, attaining the perfect, permanent fulfilment of their three laws.

It's a well-executed concept in the movie, and a terrifying thought: those entrusted to keep us safe may well be a threat to our freedom. I can't help but think how much worse it would be if those three laws were reversed.

1: A robot must protect its own existence.

2: A robot must obey orders except where such orders would conflict with the First Law.

3: A robot may not injure a human being or, through inaction, allow a human being to come to harm as long as such protection does not conflict with the First or Second Laws.

Now, we don't have humanoid robots, and nobody in their right mind would program them like this if we did. But what if the central mainframe hired people to act like robots?

Armed, strong, well equipped people acting as a single unit.

Picked, paid, praised and promoted for enforcing orders unquestioningly, with their own and each other's safety their top priority? Their highest law.

For their second law, they couldn't be tasked to obey all orders from other human beings, who might choose dangerous freedom over safety, so their second priority would be following all orders issued by the central authority.

Naturally their third priority, as long as it didn't conflict with the first two, would be safeguarding human beings from physical harm.

They'd tell themselves, us and each other that their last priority was actually their goal.

That protecting the protectors and obeying the mainframe were long-term investments in achieving it.

Like a parent in a plane putting the oxygen mask on themselves before putting one on their child.

Saving the child is the goal, but keeping yourself functional is the priority.

Sure, we're here to keep you safe, and a big part of that is making sure that we, your protectors, are safe.

1: So if you look like a threat to us, we'll kill you.

2: If you disobey the central authority, even if your actions harm nobody, we'll threaten, then attack you until you're either compliant or dead.

3: If you're no apparent threat to us, and you're obeying the rules, then we'll protect you from harm after you've been harmed, by attacking the people we think attacked you.

Using humans in lieu of robots would leave scope for a double standard: the protectors would take great pride in being undiscriminating extensions of the central authority, but when resisted, they could claim all of the intrinsic value of being fully human.

When I attack you, it's not really me attacking you, it's the central authority. I'm simply the remorseless physical manifestation of its unflinching resolve.

When you attack me, I'm a loving father of two, with elderly parents and a Samoyed. I write poems for my wife and play badminton on Thursdays.

There are, of course, downsides to hiring humans. Their hesitation to sacrifice their own freedom and that of their loved ones would very much limit just how onerous the directives from the central authority could be.

The authority's attack on human freedom in the name of human safety would then need to be a long-term project, playing out over several generations.

The central authority would need to control the rhetoric, representing individual freedoms as reckless and irresponsible, and clearly culpable for any tragedies.

In years when traffic fatalities increased, the deaths would be used to justify new, stricter restrictions, while in years when traffic fatalities decreased, the number of lives saved would be used to vindicate previously imposed rules.

The recurring theme being that injury and death are caused by excessive freedom, and that peace and tranquility are achieved by strong central control of the individual.

A generation or two after being imposed, each rule would be accepted as self-evidently necessary, and the new batch of young protectors would feel no hesitation in enforcing it to the letter.

Like the frog in the slowly boiling water, we would be marched inexorably toward complete domestication by the central authority, largely unresisting, on a road paved with shattered freedoms.

Have a great day.