For a while now, I've been fascinated by strong AI and superintelligence, along with consciousness itself. Some of my favorite movies explore these concepts in detail:

- The Matrix series

- 2001: A Space Odyssey

- Inception

- Ex Machina

- The Thirteenth Floor

- Tron

- eXistenZ

- The Lawnmower Man

- I, Robot

- A.I.

- Transcendence

- Bicentennial Man

- Dark City

I also love novels that explore these topics, such as:

- Avogadro Corp: The Singularity Is Closer Than It Appears

- Daemon

- Freedom™

- Influx

- Kill Decision

- Ready Player One

- Snow Crash

- The Player of Games

- The Diamond Age

(If you look closely, you'll see I've listed every book by Daniel Suarez. I love his work. If you have other books or movies to add to this list, please leave a comment below.)

I'm also finishing up Superintelligence: Paths, Dangers, Strategies by Nick Bostrom (which has been amazing).

As a computer programmer, I'm deeply involved in tech culture and get to see new things a little earlier than most people. I built my first websites in 1996, and even then I was convinced the Internet was going to change the world. I've felt the same way about Bitcoin, Voluntaryism, and artificial intelligence. To some degree, I feel the same way about Steem, but we're still in the early days, so I'm not quite ready to make that call.

Exploring the origins of our morality has been important to me because I'm fascinated by the future and by how our understanding of morality will shape it. We're all familiar with dystopian fiction in which robots take over the world, but how many of us explore the real-world influences that could bring about a hell or a heaven? What can we do now (if anything) to bring about an optimal future if computers become able to do everything human brains can, only orders of magnitude faster?

Smarter people than I have been talking about this for decades. I'm just happy to be along for the ride. I've started a Pindex board (think Pinterest for educational material) that collects lectures and other content, and I want to share it with you. It's called...

The Morality of Artificial Intelligence

The board will be kept up to date with interesting content, like this nugget from the paper "Future Progress in Artificial Intelligence: A Survey of Expert Opinion" by Vincent C. Müller and Nick Bostrom:

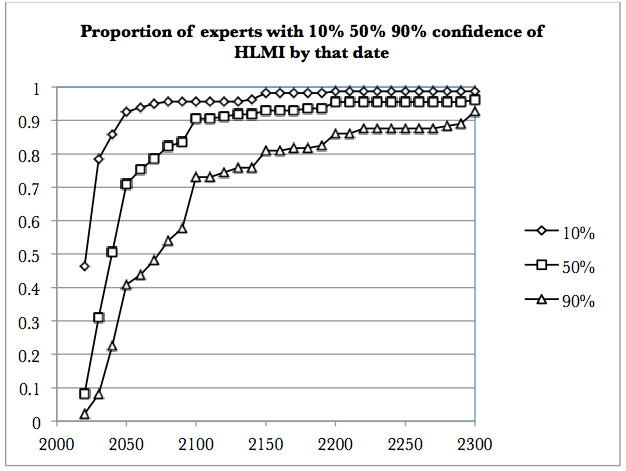

"...the results reveal a view among experts that AI systems will probably (over 50%) reach overall human ability by 2040-50, and very likely (with 90% probability) by 2075. From reaching human ability, it will move on to superintelligence in 2 years (10%) to 30 years (75%) thereafter. The experts say the probability is 31% that this development turns out to be 'bad' or 'extremely bad' for humanity."

I hope you'll join me on the Morality of Artificial Intelligence Pindex board and suggest new content as well. We as a species need to work this out. Most of the surveyed experts expect superintelligence to arrive, quite possibly within our lifetimes. The question we should be asking ourselves is: what morality should superintelligent beings have, and why? These aren't easy questions, and it looks like we have less than 50 years to answer them.