Last month I posted the article "My bot is a better voter than I am." In that post, I compared the performance of my manual voting (@remlaps) with my automated voting (@remlaps1). With the implementation of hardfork 18 and the "whale experiment," I thought it would be useful to see where things stand now, but first some background.

Background

At this point, my bot is still very rudimentary. Getting started was made easy by piston-lib, from @xeroc, but I've had time to imagine many more possibilities than I've had time to actually code. If I ever get it to a level of quality that I consider to be "presentable," I intend to open source it, but I only get to work on it in small chunks of time, so I don't know if I'll ever get it to a suitable level of usability for public consumption. If you want to automate your own voting, but don't want to roll your own bot, I recommend using steemvoter (from @steemvoter), FOSSbot (from @personz), or AutoSteem (from @unipsycho) for your own bot adventures.

My primary goal with this bot has been to provide what little support I can for the price of steem by automating votes for the types of content that I would vote for manually. To do this, I primarily use a combination of whitelists and blacklists of authors and categories, along with some randomness and a few estimates for post quality. Over the course of about 3 months, my bot has received 39 steem in curation rewards, which means it has provided somewhere around 120 steem in direct support to the content that it has voted for, in addition to any feedback effect from bringing content to the attention of other human and automated voters.
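For anyone who wants to check that estimate, here is one way to arrive at a figure in that range. It is a rough sketch, not a blockchain calculation: it assumes that about 25% of the payout generated by a vote goes to curators and the remaining 75% goes to the author, and that the bot collects the whole curation share of what it adds. The function name and the `curation_share` parameter are made up for illustration.

```python
def estimate_author_support(curation_rewards, curation_share=0.25):
    """Estimate the author rewards that accompany a given curation reward.

    Assumption (not read from the chain): curators receive `curation_share`
    of the payout a vote generates, and authors receive the rest.
    """
    if not 0 < curation_share < 1:
        raise ValueError("curation_share must be between 0 and 1")
    total_payout = curation_rewards / curation_share
    return total_payout * (1 - curation_share)


if __name__ == "__main__":
    # 39 steem of curation rewards under the assumed 25/75 split
    print(round(estimate_author_support(39)))  # prints 117
```

Under those assumptions, 39 steem in curation rewards corresponds to roughly 117 steem for authors, which is where "somewhere around 120" comes from.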

Of course, once it decides what to vote for, the bot's secondary goal is to raise revenue. I make use of vote counts and timing to try to optimize my curation rewards on each post.

I also spot check the bot's voting, usually daily, and make rule adjustments when I find it voting for things that I might not have voted for. My favorite occurrence (which happens with surprising frequency) is when the bot's log gives me posts that I can vote for manually.

What is an upvote?

Some of the voters here seem to take pride in the exclusivity of the content that they vote for. That's not me. My up-vote is worth a fraction of a cent. It's not supposed to be some monumental decision. So I am very liberal with my up-votes.

If I vote for something manually, it means that I liked something about an article. It does not mean that I liked everything. As a general rule, if an article (or comment) manages to engage my attention for as long as it takes to read or view it (or at least long enough to get the "main idea"), that article gets my up-vote. The up-vote decidedly does not mean that I liked or agreed with the viewpoint, but I go with the assumption that if something held my attention, then it would hold someone else's attention too, and that's good for the price of steem.

Since I have no way to measure attention with the bot, I try to take careful notice of the choices I'm making when voting manually, and then codify bot rules that emulate those choices.
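To give a flavor of what "codifying" a rule can look like, here is a minimal sketch. It is not the bot's actual code: the `Post` fields, the list contents, the word-count threshold, and the vote probability are all hypothetical stand-ins for the whitelists, blacklists, quality estimates, and randomness described earlier.

```python
import random
from dataclasses import dataclass


@dataclass
class Post:
    author: str
    category: str
    word_count: int


# Hypothetical rule lists; a real bot would load these from configuration.
AUTHOR_WHITELIST = {"alice"}
AUTHOR_BLACKLIST = {"spammer"}
CATEGORY_WHITELIST = {"science", "music"}
CATEGORY_BLACKLIST = {"spam"}


def should_vote(post, rng=random, vote_probability=0.5, min_words=300):
    """Decide whether to vote for a post using simple list-based rules."""
    # Blacklists always win.
    if post.author in AUTHOR_BLACKLIST or post.category in CATEGORY_BLACKLIST:
        return False
    # Whitelisted authors always get a vote.
    if post.author in AUTHOR_WHITELIST:
        return True
    # Everyone else must post in a whitelisted category...
    if post.category not in CATEGORY_WHITELIST:
        return False
    # ...pass a crude quality estimate (word count as a stand-in)...
    if post.word_count < min_words:
        return False
    # ...and then win a coin flip, so the bot's votes are not fully predictable.
    return rng.random() < vote_probability
```

The daily spot checks then amount to adjusting the lists and thresholds whenever the function's answer disagrees with what I would have done by hand.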

Now on to the data:

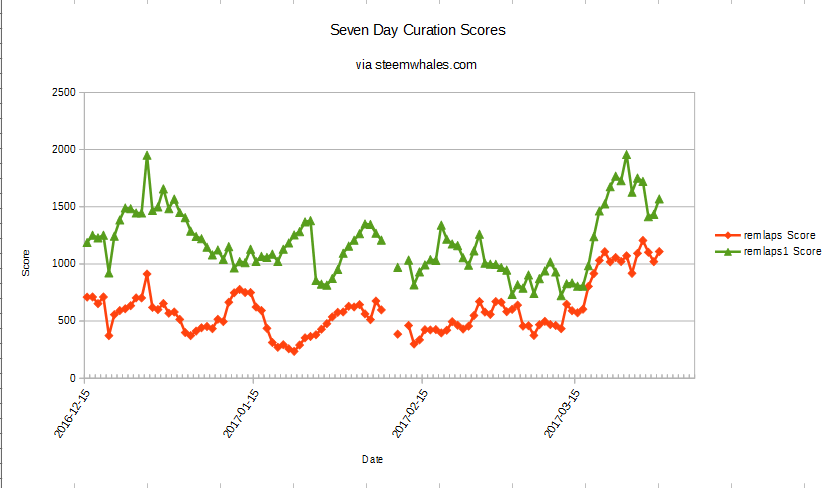

Scores taken from steemwhales.com

(higher is better)

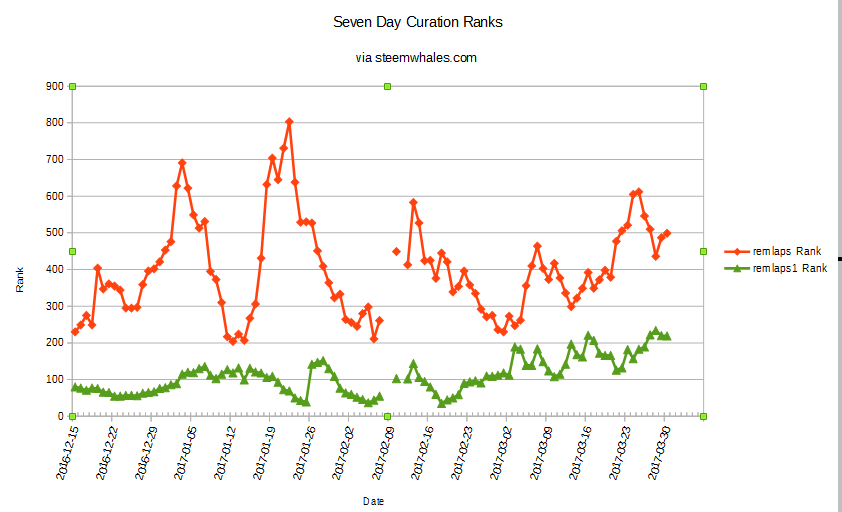

Ranks taken from steemwhales.com

(lower is better)

Notes on scores and ranks

- As with my previous post, bot-me is still a better voter than real-me. However, the whale experiment is bringing real-me closer. In fact, the whale experiment is the first time that real-me's best scores were better than bot-me's worst scores.

- I think it's fascinating that the bot's scores improved but its rank fell during the whale experiment. To me, that implies that the bot is benefitting from the whale experiment, but a number of other voters are benefitting more.

- As with my last post, the fact that real-me and bot-me votes are tracking together suggests that the bot is doing a reasonably good job at approximating my preferences.

- In early March, there is about a week where the two paths seem to diverge. This was an artefact of the way that steemwhales calculates the scores. I powered down some steem from my manual account and used it to power-up the bot account. This artificially improved my manual scores and reduced my automated scores.

- As many other bot operators have reported, my bot lost a couple of hours of voting last week and a couple of hours this week due to changes in the websocket protocols.

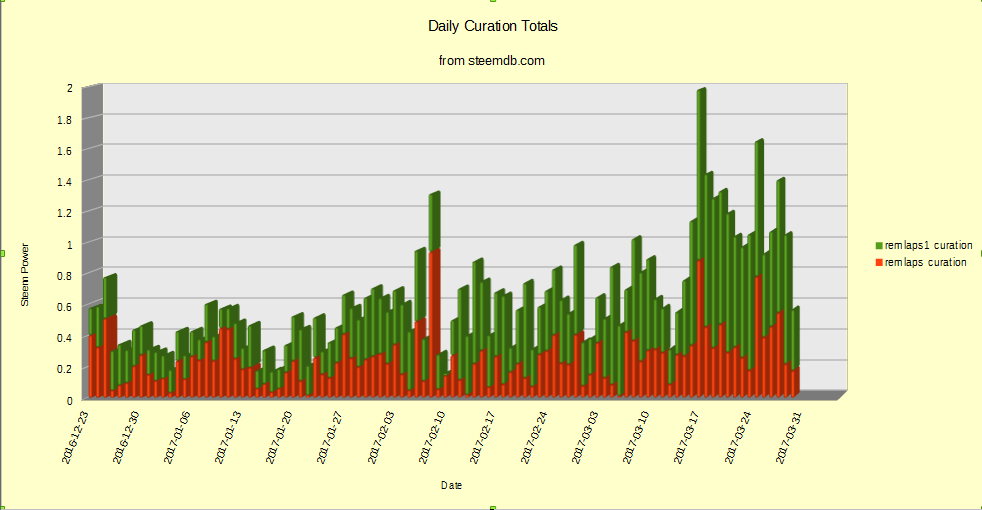

Daily Curation totals taken from steemdb.com

I don't think there's much to say about the curation totals, except that I'm very curious to see what happens next week when the post-HF18 payouts start.

Thoughts on the whale experiment

I remain neutral on the experiment. Since it's not changing the total payouts, by definition it is having 0 net impact. However, it's interesting to examine the winners and losers. Reportedly, author rewards have been more evenly distributed. Some people see this as an unambiguous good thing. I don't. I think it's a data point that doesn't really tell us anything. Personally, I have seen 4 direct effects that appear to arise from the whale experiment:

- My curation totals went up.

- My author rewards may have gone down, and so have my son's (although I haven't been posting much).

- My vote became worth a penny (nullified by HF18).

- My son got really (really!) shafted by the combined impact of the whale experiment and HF18 on this post:

  - Before the whale experiment started, the post won the steemvoter guild vote of the day for about $8 in its 30-day payout window.

  - After the whale experiment started, whale flagging drove down curation rewards on other posts in the 30-day pool, which drove his payout up near $100.

  - The whales started flagging steemvoter and retroactively flagged his pre-experiment post back down to about $7.

  - HF18 drove it down even further, to $0.09, which will pay out tomorrow.

  - I frequently remind him that "it's a lottery," but anyone would be disappointed after a payout roller-coaster like that.

As a result of the experiment and the change in rewards, my advice to my 15-year-old son has been that he needs to stop working on articles that take 2, 3, or 4 hours to complete and switch to lower quality content that can be shorter and more frequent. I'm sure I'm not the only one to reach this conclusion. If this is the goal that the whales are trying to achieve, then we can say they've been successful. I guess the questions are: (i) Does steemit need more content at lower quality or less content at higher quality? -and- (ii) Who decides?

Morally, I have a problem with one group of whales actively working to silence the others, but there is also a problem of disproportionate influence that results from the N² influence curve, so I sort of consider those to be offsetting penalties.
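To put a number on "disproportionate," here is a toy comparison of a linear curve and a squared curve. This is a simplified model that I am assuming for illustration: it treats each post's weight as the backing stake raised to a power and ignores any constants or other details of the real reward formula. The function name and the stake values are hypothetical.

```python
def reward_shares(stakes, exponent=2):
    """Split a reward pool among posts in proportion to stake ** exponent."""
    weights = [stake ** exponent for stake in stakes]
    total = sum(weights)
    return [weight / total for weight in weights]


if __name__ == "__main__":
    stakes = [10, 1]  # one post backed by 10x the stake of the other
    print(reward_shares(stakes, exponent=1))  # linear: shares in a 10:1 ratio
    print(reward_shares(stakes, exponent=2))  # squared: shares in a 100:1 ratio
```

In this toy model, a voter with ten times the stake moves a hundred times the reward, which is the kind of imbalance I have in mind.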

Update: One last observation about the whale experiment that I forgot to include. I ignore the trending page, but my son pointed this out to me repeatedly during the last couple weeks. The trending page is now thoroughly dominated by posts about steem and steemit. I consider this to be a negative consequence. For example: