Not to be outdone by the illustrious @GMuxx, I decided to post about our Transatlantic Rendering Relay, as well. I’m pretty excited about it. I have a background in basic 3D modeling and animation, but Iray technology is all new to me. The degree of photorealism achievable with it is staggering.

In 2010, Sims 2 was all the rage and I had every expansion pack. I’d taken my favorite Sims to platinum status and was starting to get bored when I discovered machinima. I made all kinds of silly little movies with my Sims, at first using standard game content and animations. I blogged about my experiences here. Eventually, I learned how to create custom content and poses, even how to get those gibberish-speaking Sims to lip-sync along with real words. Sort of. LOL And then … (cue the Hallelujah Chorus) … I discovered iClone.

It was rough going at first. The technology back then was nothing compared to the technology available today. Lighting was sketchy. Animations were stiff and mechanical. Viseme (lip sync) was more “off” than “on.” Yet I recognized the potential and knew the tools to produce this type of media would only improve.

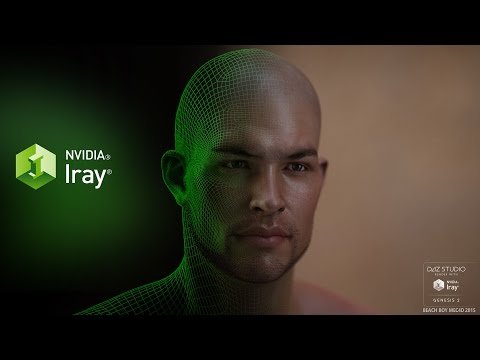

And improve they did. I look back on my old machinima films and know they were cutting edge at the time, but when stacked next to the most basic Iray renders of 2018—WOW. Software and game developers have evidently been working hard. They’ve put tools into the hands of regular users like me that we could only dream about even a few short years ago. Take a look at the short, three-minute sampling of NVIDIA Iray renders in the video embedded below. None of those people are real. Those are all C-gen, or computer-generated characters. They are all wireframe, fully rigged and animatable figures. Those aren’t photos or even skilled airbrushing. Even as immersed as I have been lately in the world of animation and rendering, I still stand amazed at the quality of this work.

One thing I wasn’t prepared for after my extended hiatus from animation is the enormous amount of computing resources required for photorealism. Years ago, I was quite proud of my big Asus gaming machine that could render globally-lit animation frames almost as fast as they could play in the timeline. It finally crashed last year before I ever had a chance to cripple it under the load of an Iray render. I suppose it would not have performed nearly as well with physically-based render technology as it did with the simpler scene lighting of 2010. I learned recently that, in order to work in real time with complex Iray renders, I’d need a minimum of two Titan X SCs, or their current counterparts, dual GeForce 1080s. The price of SBD and Steem’s gonna have to rise a lot higher before I can afford that kind of setup. I currently run a single GeForce 1050 and it takes more than an hour to render a single frame of the animation we’ve put together for Steemhouse. Without the cooperative render relay that’s happening as a result of GMuxx’s post, it would have taken weeks to render that 500-frame project.
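For anyone who likes to see the arithmetic behind that “weeks” estimate, here’s a rough back-of-the-envelope sketch in Python. It’s only an illustration: the function name `render_days` is made up for this post, it rounds “more than an hour” down to exactly one hour per frame, and it assumes every machine in the relay renders at the same speed as mine and runs around the clock, which almost certainly isn’t true in practice.

```python
import math


def render_days(frames: int, hours_per_frame: float, machines: int = 1) -> float:
    """Estimate wall-clock days to render `frames`, split evenly across `machines`.

    Hypothetical helper: assumes identical machines running 24 hours a day.
    """
    if frames < 0 or hours_per_frame < 0 or machines < 1:
        raise ValueError("frames and hours_per_frame must be >= 0, machines >= 1")
    # The slowest machine gets the rounded-up share of the frames.
    frames_per_machine = math.ceil(frames / machines)
    return frames_per_machine * hours_per_frame / 24


if __name__ == "__main__":
    # 500 frames at an assumed 1 hour each, for one, three, and four machines.
    for machines in (1, 3, 4):
        days = render_days(500, 1.0, machines)
        print(f"{machines} machine(s): about {days:.1f} days")
```

Under those assumptions, one machine needs roughly three weeks of nonstop rendering, while splitting the frames three ways brings it down to about a week. Since each frame really takes more than an hour on my laptop, the true single-machine figure would be higher still.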

Since the original Transatlantic Render Relay post, we’ve received a couple of offers of help from people with powerful machines, and we will take them up on it. After the rendering is finished, there’s post-processing, which will take a bit of time as well. Is there a better way? Certainly there are easier, faster ways. But the visual impact of the animation we’re producing takes top priority. After this project, we’ll look into different solutions for the future.

So here’s the question: did I achieve photorealism with this project? No. I did not. Not in my opinion, anyway. It still looks more like lush, detailed animation than an image come to life. I’m quite happy with it, though. It serves our purpose well. Since finishing the animation phase of the project, I’ve continued to work on my skills with photorealism. I’ve learned that the two biggest factors that determine whether an object can fool the human eye are materials and lighting. Get those right, and the modeling and post-processing phases will serve only to enhance.

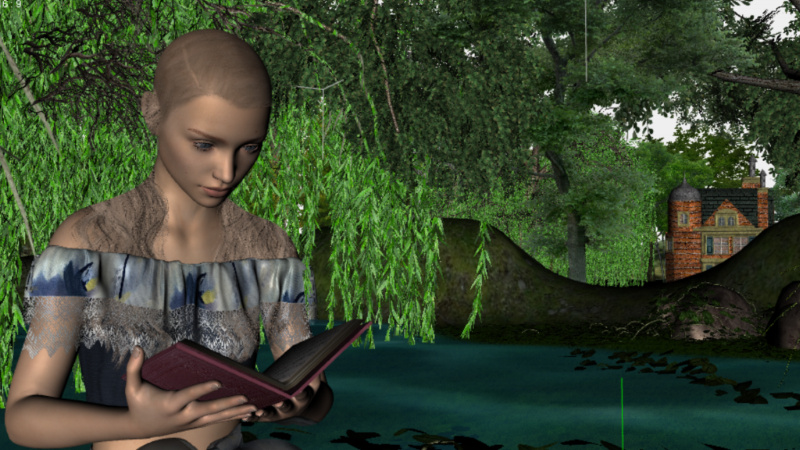

An example of what I mean can be seen in the following image. This still shot of a custom character is visually impressive. But you can see instantly that it’s a 3D model. Something about the lighting and textures is just “off.”

The answer in this case is in the materials. The character’s “skin” is very well done, very detailed. But it doesn’t have the shader properties specific to Iray. Shaders add another level of information the processor has to compute. They instruct the renderer about how to scatter light on certain surfaces, how reflective those surfaces are, and other details that make up the PBR (physically-based rendering) profile. The catch is that Iray materials don’t render properly in other engines. I’ll show you what I mean.

Have a look at this image of the same character in a texture-based render environment (one that is not Iray or any form of PBR).

Now have a look at the same character after Iray shaders have been added.

Looks pretty funky, right? Well, have a look at the same image when the Iray engine is switched on and PBR calculations are applied.

Makes a pretty big difference, doesn’t it?

This is the same principle I worked with for the entire process of creating the Steemhouse animation. As I said earlier, I never achieved true photorealism with it, but I’m happy with where it stands. We’ll use the same model in future projects and, without changing a thing about her materials, may be able to come close enough to photorealism to satisfy me. We’ll see how that goes.

In the meantime, I have no complaints about the lush backdrop and the rich, deep colors of the current project. Below is the first frame of the animation rendered with Iray. The forest set, the pond, and the character herself are items I downloaded because of their built-in Iray capabilities. The building in the background and the book are items I modeled myself from their basic primitives. Although they respond favorably to Iray calculations, they don’t have any shaders or special properties that make them behave in specific ways under PBR conditions. Why don’t they? Because I haven’t a clue how to incorporate those features…yet. But I am learning. The other factor that detracts from the photorealistic accuracy of this project is the lighting. Since I don’t have dual GeForce 1080s, I’m unable to work with Iray rendering in real time, so I can’t easily see how the light interacts with the set and props. And with each frame taking more than an hour to render, I’m sure you can see the issues that creates.

I had to content myself with working in a texture-based environment, which shows the props well but doesn’t display the Iray materials on the character properly at all. In the following image of the same frame shown above, I have the Iray engine turned off. You can see the difference. I have enough computing power to navigate the viewport in the texture-based environment, but the minute I switch to the Iray engine, processing grinds to a near halt. It’s impossible for me to fine-tune the lighting and PBR settings under these conditions. But one day I hope to own the equipment to do this the way Iray meant for it to be done.

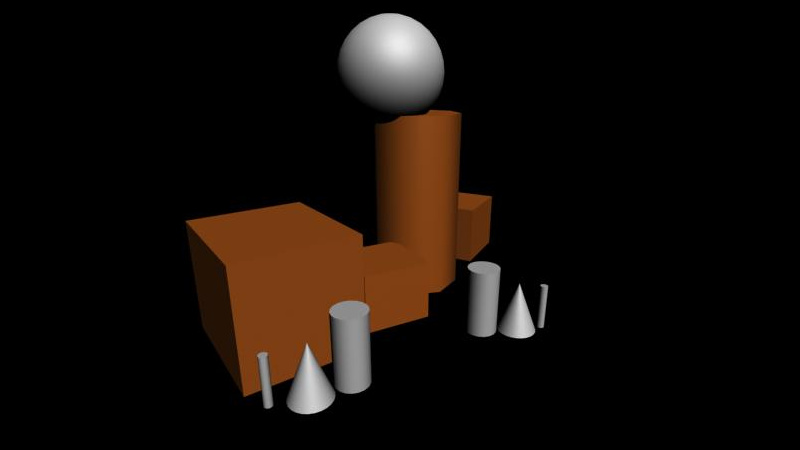

Iray material aside, I’m still pleased with the props I made for this project. Here are the primitives I started with to make the Steemhouse.

Using a photo of a real building, I modeled the basic architecture of the prop.

Then I added the windows and other relief details that make the model really “pop.”

Finally, I added the texture and opacity maps, and voilà! There we have it.

The addition of shaders to the metal dome and steam whistles would have really made this image leap off the screen. But I had to keep in mind my limited processing abilities and how heavy a load this project has already placed on my ROG Strix laptop. I’m going to convert the above image to a flat, monochrome vector image for the logo anyway. If I use it again for another animation, I can add the shaders and displacement maps at any point along the way.

Between the three (possibly four) of us rendering this project, we’ll keep you posted on our progress. So far, so good. We’re reaching the halfway point already, and it’s only been a few days. This is good news! Stay tuned for more updates.