A language character counting tool

According to the latest Utopian rules, any contribution in the 'translation' category must translate more than a certain number of words. If the translator works in Crowdin, the word count is easy to obtain. However, if the translator works on a GitHub project directly, it is harder to count the characters in a particular language, because translation files usually contain characters from several languages. I have implemented a tool to do this job. It is written in Python and has been tested on Ubuntu 16.

Image source: pixabay.com

Implementation

The basic idea is to analyse the text and check each character against the Unicode values defined for the target language. In principle, the script works with any language: just edit the configuration file. To make it handy for both translators and moderators, the tool supports counting both individual files and all files contained in a folder.
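A minimal sketch of this idea, assuming the configuration maps each locale to a list of inclusive Unicode code-point ranges. The names `LOCALE_RANGES` and `count_chars` are hypothetical and are not necessarily how wordcounter.py is organised. The zh-CN entry covers only the basic CJK Unified Ideographs block (U+4E00 to U+9FFF), and the `ru` entry is only an illustration of adding another language.

```python
# Hypothetical stand-in for the configuration file:
# locale -> list of inclusive (start, end) code-point ranges.
LOCALE_RANGES = {
    "zh-CN": [(0x4E00, 0x9FFF)],  # basic CJK Unified Ideographs block only
    "ru": [(0x0400, 0x04FF)],     # Cyrillic block (illustrative extra locale)
}


def count_chars(text, locale, ranges=LOCALE_RANGES):
    """Count characters in `text` whose code points fall in the locale's ranges."""
    locale_ranges = ranges[locale]
    return sum(
        1
        for ch in text
        if any(start <= ord(ch) <= end for start, end in locale_ranges)
    )


print(count_chars("zh-CN:\n  File: 文件", "zh-CN"))  # prints 2
```

Supporting another language then only means adding its ranges to the table; a real configuration for Chinese may also need extension blocks such as U+3400 to U+4DBF.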

Test

I have written a couple of tests to validate that the tool works, and all tests pass.

$ python test.py
..
----------------------------------------------------------------------
Ran 2 tests in 0.002s

OK
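For illustration, tests of this kind could be written with the standard `unittest` module, as in the sketch below. It declares its own hypothetical `count_chars` helper (counting the basic CJK Unified Ideographs block, U+4E00 to U+9FFF) so that it runs on its own; the repository's actual test.py may check different cases.

```python
import unittest


# Hypothetical helper: count characters in the basic CJK Unified Ideographs block.
def count_chars(text, start=0x4E00, end=0x9FFF):
    return sum(1 for ch in text if start <= ord(ch) <= end)


class CountCharsTest(unittest.TestCase):
    def test_mixed_text(self):
        # Only the six ideographs count; English keys and punctuation are ignored.
        self.assertEqual(count_chars("File: 文件\nEdit: 编辑\nHelp: 帮助"), 6)

    def test_no_target_characters(self):
        self.assertEqual(count_chars("File: Edit: Help:"), 0)


if __name__ == "__main__":
    # exit=False returns control instead of calling sys.exit after the run.
    unittest.main(argv=["test"], exit=False)
```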

How to use

First, clone this repository to your PC.

Then modify the first line of wordcounter.py so that it points to your Python interpreter:

#!/home/yuxi/environments/myenv/bin/python

To count a single file, run:

/YOUR_FOLDER/wordcounter.py FILENAME locale

To count all files within a folder, run:

/YOUR_FOLDER/wordcounter.py FOLDER locale
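Both modes can be served by one entry point. The sketch below is one possible approach, not necessarily what wordcounter.py does: it checks whether the argument is a directory and, if so, walks it recursively with `os.walk` and sums the per-file counts. The names `count_file` and `count_path`, the zh-CN-only range table and the UTF-8 decoding are all assumptions.

```python
import os
import sys

# Hypothetical stand-in for the tool's configuration file.
LOCALE_RANGES = {"zh-CN": [(0x4E00, 0x9FFF)]}


def count_chars(text, locale):
    ranges = LOCALE_RANGES[locale]
    return sum(1 for ch in text if any(lo <= ord(ch) <= hi for lo, hi in ranges))


def count_file(path, locale):
    # Assumes UTF-8 input; undecodable bytes are replaced instead of raising.
    with open(path, encoding="utf-8", errors="replace") as f:
        return count_chars(f.read(), locale)


def count_path(path, locale):
    """Count one file, or every file under a folder (recursively)."""
    if os.path.isdir(path):
        total = 0
        for root, _dirs, files in os.walk(path):
            for name in files:
                total += count_file(os.path.join(root, name), locale)
        return total
    return count_file(path, locale)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python sketch.py FILENAME_OR_FOLDER locale
    print(count_path(sys.argv[1], sys.argv[2]))
```

Summing per-file counts keeps the folder mode consistent with the single-file mode: the total for a folder equals the sum of what each file would report on its own.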

For example, if samples/1.yml has the following content:

zh-CN:
  File: 文件
  Edit: 编辑
  Help: 帮助

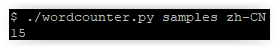

Then run the command:

./wordcounter.py samples/1.yml zh-CN

It returns 6, one for each Chinese character in 文件, 编辑 and 帮助.
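As a quick check of that number, the sketch below counts characters in the basic CJK Unified Ideographs block (U+4E00 to U+9FFF, an assumed range for zh-CN) line by line in the same sample content. The `zh-CN` key and the English keys contribute nothing, and each value contributes two.

```python
sample = """zh-CN:
  File: 文件
  Edit: 编辑
  Help: 帮助
"""


def is_cjk(ch):
    # Basic CJK Unified Ideographs block only (assumed range for zh-CN).
    return 0x4E00 <= ord(ch) <= 0x9FFF


# True counts as 1 when summed, so this counts matching characters per line.
per_line = [sum(is_cjk(ch) for ch in line) for line in sample.splitlines()]
print(per_line)       # [0, 2, 2, 2]
print(sum(per_line))  # 6
```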

Run the following command to count the Chinese characters in all files within a folder:

./wordcounter.py samples/ zh-CN

It returns:

The tool is available here: https://github.com/yuxir/wordcounter

To prove that this is my own work, I have added my Steemit URL to the README in the GitHub repository:

Posted on Utopian.io - Rewarding Open Source Contributors