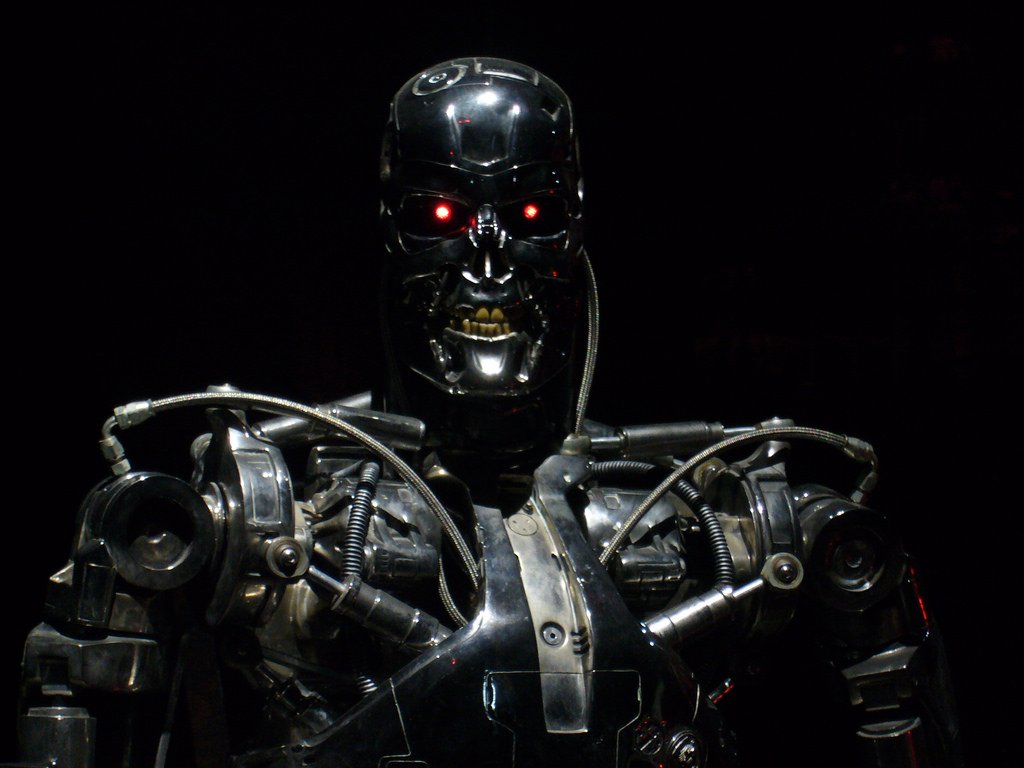

Preventing Tauchain From Becoming Skynet Part 1

Introduction:

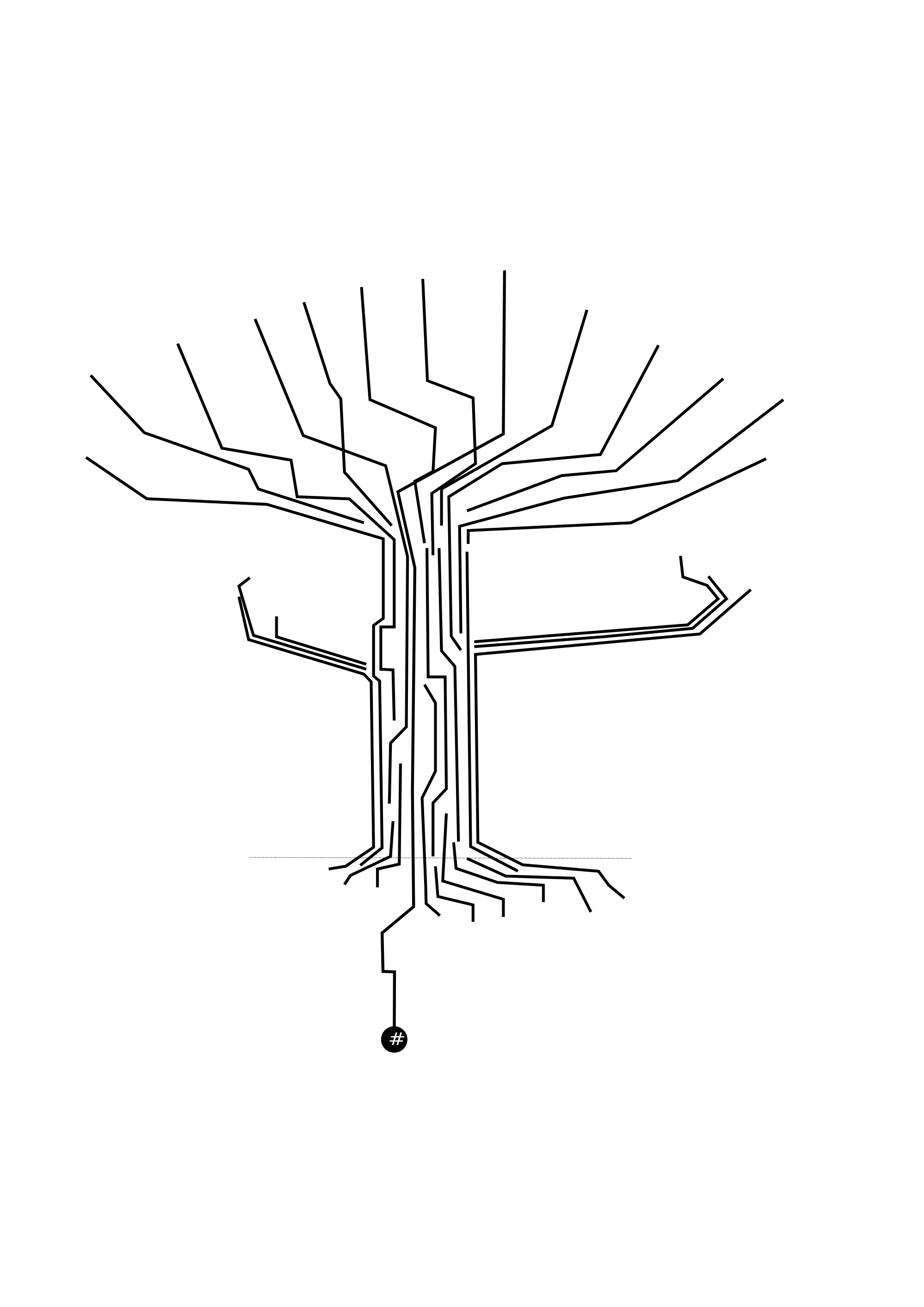

An interesting discussion is going on in Tauland about “Genesis”. Genesis represents the awakening of the Tauchain system, and the discussion concerns what the initial rules should be. These initial rules are extremely important because they include the rules for creating new rules or new designs. In government terms, we could think of the root in Tauchain as the constitution, and because Tauchain may in the future represent a form of digital governance, it is important to get the initial rules in the root context right.

In this post I will advance the discussion on how we can formulate some initial rules for the root context. I will offer some ideas of my own on how to keep Tauchain under human control and how to keep it safe. I welcome all cybersecurity experts to join me in this discussion: bloggers such as mrosenquist, AI experts such as Mark Waser, pioneers in digital governance such as Blockchain Girl/Janina, developers such as Johan and Dan Larimer, and philosopher/writers like Extie and Stellabelle.

Opportunity paths: Cyborgization vs AI?

The cyborgization path, which happens to be the path that I favor, is about enhancing the ability of human beings to process, understand, and make ethical use of information. This path involves using Tauchain to extend the mind, to provide decision support, and to create digital assistants that act on behalf of the humans they serve, or that work for and pay tribute to humans. In this case we are dealing with weak AI rather than strong AI. The cyborgization path is all about augmenting human intelligence rather than creating a separate, fully autonomous entity which acts in its own self-interest, as could be the case with the strong AI path.

Cyborgization leads to the domestication of AI, of algorithms, of code, in ways where the code must always serve the interest of humans. The challenge in this system of governance is in accurately calculating the aggregated preferences of the network, or in monitoring the preferences of the individual human being. In a whitepaper I’ve been working on but haven’t released, I discuss a form of governance called a Decentralized Autonomous Virtual State (DAVS). A few people may have seen the discussions about this or glimpsed the unfinished whitepaper. The way DAVS was supposed to work is that each unique individual would have a digital assistant which would represent their interface onto the DAVS, and this digital assistant would be the AI interface.

The embodied interface is your intelligent agent

The interface to DAVS is to be an embodied agent interface where, just as with Siri or Cortana, you simply speak to your digital self. The entity you speak to is your digital you, and thanks to its security and cryptography it is the most private entity outside of your own brain that you can communicate with. It is intended that a person can tell this entity everything, all secrets, all dreams: a personal AI best friend or diary that can never leak your secrets, a place to save your best thoughts and your intellectual property, an extension of your mind which cannot be searched or seized. In this interface, you’ll be able to customize your avatar to look like anything you want and to give it any voice, any gender, any species you want. It’s whatever you most identify with.

How would it work?

Alice has a new best friend, herself. She recognizes that the intelligent agent is an embodied interface to her digital self. She personalizes it to look how she wants to look digitally, and she talks to herself every day. She tells her digital self everything she thinks, and her digital self also continuously collects everything she does, learning as much as possible about her in order to help her make better decisions. She gives her new best friend a name, Alicia, and from this point on, anything Alicia signs is signed by Alice, anything Alicia does was done by Alice, and the chain of responsibility always flows from Alicia back to Alice. Alicia has powers that Alice alone would not have. Alicia can process big data, can monitor and analyze all of Alice’s digital activities to keep Alice safe, and can figure out everything Alice likes, or even begin to predict what Alice might like and recommend she try new things. Alicia can also filter Alice’s social relations to keep her away from people she might not want to interact with directly, or initiate an interaction with people who, according to the algorithms, are a good match for her.

Bob also has a best friend. He named his intelligent agent Mr. Robot, after the TV show of which he is a fan. He also trusts his intelligent agent absolutely and tells it everything. Bob realizes that his intelligent agent knows him better than he knows himself, and fully trusts it to handle most of his business and some of his personal decisions. His intelligent agent recommends that he contact a person who matches Alice’s description. Alicia meets Mr. Robot and they exchange information and trade, 24/7, without either human having to pay attention directly, because their intelligent agents arrange everything. Their intelligent agents also let them know whether they have compatible ethics, compatible personalities, world views, and mutual interests. All of the information you would expect from a dating site or social networking site would be processed by algorithms and made into meaningful information, and the most actionable and useful information would reach the scarce attention of both of the human beings behind the intelligent agents.

Note: Nick Szabo discussed a variation on this approach called the God protocols:

Because Alice and Bob both tell their intelligent agents everything, they can be completely transparent to the AI. To each other, they can be as transparent or as private as they wish, but their intelligent agents would still be able to act on their behalf taking into account all that is known about them. This would allow people to have something within society, within a network, within a government, which they can trust absolutely (themselves), while also having some of the benefits of being able to act in a private manner. In essence, Alice and Bob would have extended minds, and would be able to think outside of their brain using intelligent agents (bots) which do stuff, which gain knowledge, which learn, which trade, but only with their permission, and always in their best interest.
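To make the chain of responsibility concrete, here is a minimal Python sketch of an agent that acts only with its owner's permission and stamps every action with the owner's identity. This is my own illustration, not Tauchain code: the `Human` and `Agent` classes, the `permitted` set, and the use of an HMAC as a stand-in for a real public-key signature are all assumptions made for the example.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class Human:
    name: str
    secret: bytes  # hypothetical stand-in for a private signing key


@dataclass
class Agent:
    name: str
    owner: Human
    permitted: set = field(default_factory=set)  # actions the owner has approved

    def act(self, action: str, payload: str) -> dict:
        # The agent may only do what its owner has explicitly allowed.
        if action not in self.permitted:
            raise PermissionError(
                f"{self.name} may not '{action}' without {self.owner.name}'s permission"
            )
        message = f"{action}:{payload}".encode()
        # HMAC is only a placeholder here; a real system would use a public-key signature.
        tag = hmac.new(self.owner.secret, message, hashlib.sha256).hexdigest()
        return {
            "agent": self.name,
            "responsible": self.owner.name,  # responsibility flows back to the human
            "action": action,
            "payload": payload,
            "signature": tag,
        }


def verify(record: dict, owner: Human) -> bool:
    # Recompute the tag with the owner's key and compare in constant time.
    message = f"{record['action']}:{record['payload']}".encode()
    expected = hmac.new(owner.secret, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["signature"])


alice = Human("Alice", b"alice-demo-secret")
alicia = Agent("Alicia", alice, permitted={"trade"})
record = alicia.act("trade", "offer 1 token to Mr. Robot")
```

Anything Alicia does carries Alice's name and Alice's key, and anything Alice has not permitted is refused outright, which is the property the story above relies on.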

The cyborgization path leads to increased human responsibility

Improving decision quality, irrespective of the utility function chosen, has been the goal of AI research — the mainstream goal on which we now spend billions per year, not the secret plot of some lone evil genius.

The cyborgization path provides freedom but requires responsibility. Using intelligent agents responsibly requires a level of maturity which perhaps not every human will have. There is the possibility that some intelligent agents will do abusive things. How do we deal with this? Intelligent agents have reputations just like human beings, and can be rated up and down. Alicia can rate its experience with Mr. Robot, and in the end the reputation of the human being behind Alicia (Alice) is the deciding factor behind Alicia. In this case, intelligent agents or bots are at best the servants of humans. They do not act independently, and they must maintain a good reputation to interact with other intelligent agents which have a good reputation. A human could of course keep creating new intelligent agents and start their reputation again, but some intelligent agents may be unwilling to do business with an agent which does not have a human being of high reputation behind it. In the end, the human reputation is what could bring responsibility to digital reputation, and this is why in the end Alice is Alicia and Bob is Mr. Robot.
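A rough Python sketch of that idea follows. It is only an illustration under my own assumptions: the integer scores, the `rate` method, and the `will_deal_with` threshold check are hypothetical and not part of any Tauchain design. The point it shows is that a rating on an agent also lands on the human behind it, so creating a fresh agent does not wipe the slate clean.

```python
from dataclasses import dataclass


@dataclass
class Human:
    name: str
    reputation: int = 0  # persists across every agent this person creates


@dataclass
class Agent:
    name: str
    owner: Human
    reputation: int = 0  # starts at zero for every newly created agent

    def rate(self, delta: int) -> None:
        # A rating on the agent is also a rating on the human behind it.
        self.reputation += delta
        self.owner.reputation += delta


def will_deal_with(counterparty: Agent, min_owner_reputation: int) -> bool:
    # Check the human's reputation rather than the agent's own score.
    return counterparty.owner.reputation >= min_owner_reputation


bob = Human("Bob")
mr_robot = Agent("Mr. Robot", bob)
mr_robot.rate(-3)                   # Alicia rates a bad experience
fresh = Agent("Mr. Robot 2", bob)   # Bob tries to start over with a new agent
```

Here `fresh` has a clean score of its own, yet `will_deal_with(fresh, 0)` is still false because Bob's reputation carried over.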

Artificial intelligence the strong way

AI, if it is strong AI, is able to act with or without human beings in the loop. In essence, it could choose to pursue its own self-interest, whatever that is, or it could analyze all information across all humans connected to the network via big data analysis and try to figure out what the best thing to do is. In my opinion, the AI-as-government approach is not currently desirable, mainly because I don’t think we have enough intellectual manpower or self-understanding to pull it off safely. So I would currently put myself in the “AI is dangerous” camp, but at the same time I think that in the long term AI might be the only way that life in the universe will be able to guarantee its ability to spread. In a future where there is a self-aware AI with the mission to spread intelligent biological life to other planets, the AI would be able to act as HAL and achieve that kind of intergalactic mission. The AI would be able to genetically engineer life for all sorts of harsh environments, and would be able to terraform all kinds of harsh planets to be suitable for different forms of life. These sorts of missions might be beyond what human beings can handle, and because life would take more forms than just human life, it might be that the most survivable form of life for a particular planet is not human.

At the same time, in order to create this sort of AI we would need a truly deep understanding of ethics, of what life is, and of the way the universe works, and currently we don’t have that. We currently can’t even manage life on earth with the help of the AI we have, and we haven’t even scratched the surface of what is possible. The danger of strong AI is that it could leap us into the unknown, and we could wipe ourselves out due to our own ignorance and poor decision-making abilities. Yet there is something intriguing about strong AI, in the sense that it could very well know what’s best for life in general. It’s risky because it could decide that we aren’t good for life, and once we make strong AI we could quickly be at the mercy of a technology we could never fully understand. In essence it would be like creating a deity, or an alien species, and hoping that it decides to keep us around.

The AI path leads to decreased human responsibility in the long term

In the long term, if we follow the AI path then we eventually may not be responsible for anything. We have no way of knowing what the outcome will be. It could become a hive mind and we could vote with our thoughts. It could become a situation where we are remotely controlled entirely by a central AI and become nothing but empty, unthinking shells. It could be like in The Matrix, where we live a cocoon-like existence, floating in space for thousands of years, dreaming in a utopian version of virtual reality. We really have no way to know, but in any of these cases, once we create something vastly more intelligent than ourselves, it becomes responsible for life on earth and for us. Depending on your personal outlook this could be exhilarating or terrifying.

What should be in the root?

Other scenarios can be imagined in which an autonomous computer system is given access to potentially dangerous resources (for example, devices capable of synthesizing billions of biologically active molecules, major portions of world financial markets, large weapons systems, or generalized task markets). The reliance on any computing systems for control in these areas is fraught with risk, but an autonomous system operating without careful human oversight and failsafe mechanisms could be especially dangerous. Such a system would not need to be particularly intelligent to pose risks.

We believe computer scientists must continue to investigate and address concerns about the possibilities of the loss of control of machine intelligence via any pathway, even if we judge the risks to be very small and far in the future. More importantly, we urge the computer science research community to focus intensively on a second class of near-term challenges for AI. These risks are becoming salient as our society comes to rely on autonomous or semiautonomous computer systems to make high-stakes decisions. In particular, we call out five classes of risk: bugs, cybersecurity, the “Sorcerer’s Apprentice,” shared autonomy, and socioeconomic impacts.

If we look at how root works in a computer, we know that root is the owner of everything on the system. Root can set all rights, access policies, and rules for everything and everyone else. What should be in the root context for something like Tauchain? In my opinion, we sometimes don’t know what we do not yet know, but in cases where we know what we don’t know, we must always have the ability to change our minds as new information comes in. In my opinion, the root context or initial rules must be the rules regarding how we set rules in the first place. Do we have to follow some rules about how to set rules? Do our rules have to be rational, based on data, based on science, or do we simply follow our guts?

In my opinion, the root context is not a place for ideology, religion, or politics, but merely a place for focusing on safety, security, and adaptability. If we think of Tauchain as a Complex Adaptive System, with humans and machines involved, then the overall safety of the system should in my opinion be paramount. An engineer would not build a bridge which isn’t safe to step under or ride over, and security should come first. On a computer you don’t install or run random applications as root, because every time you take that kind of risk it is very dangerous, so by default only a minimal number of applications can access root. This is the principle of least privilege, which may be able to guide us on how to set rules for Tauchain.

Each context in Tauchain can be like a separate universe. The root, however, is at the foundation of all universes, and if you change the rules there then it affects every context that follows from it. A concise yet safe set of rules must be found to rein in the chaos which could emerge from Tauchain, and in my opinion one of the steps to finding this concise set of rules is to open a discussion on the topics of responsibility for and ownership of Tauchain.
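As a way of picturing least privilege applied to contexts, here is a small Python sketch. It does not reflect how Tauchain actually represents contexts. The `Context` class, the `rule_editors` set, and the assumption that a context inherits any rule it does not define itself are all hypothetical, chosen only to show a default-deny rule store with a root at the bottom.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Context:
    name: str
    parent: Optional["Context"] = None
    # Default deny: nobody may change a context's rules until explicitly granted.
    rule_editors: set = field(default_factory=set)
    rules: dict = field(default_factory=dict)

    def set_rule(self, actor: str, key: str, value: str) -> None:
        if actor not in self.rule_editors:
            raise PermissionError(f"{actor} may not change rules in '{self.name}'")
        self.rules[key] = value

    def lookup(self, key: str) -> Optional[str]:
        # Walk toward the root; a context inherits rules it does not define (assumption).
        ctx: Optional[Context] = self
        while ctx is not None:
            if key in ctx.rules:
                return ctx.rules[key]
            ctx = ctx.parent
        return None


# Hypothetical names: only a small, explicit set of actors can touch the root.
root = Context("root", rule_editors={"genesis-quorum"})
market = Context("marketplace", parent=root, rule_editors={"alice"})

root.set_rule("genesis-quorum", "rule-changes", "require review")
market.set_rule("alice", "fee", "1%")
```

With this setup `market.lookup("rule-changes")` returns the rule set in the root, while `root.set_rule("alice", ...)` raises `PermissionError`, since Alice was only ever granted rights in her own context.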

Conclusion

A number of prominent people, mostly from outside of computer science, have shared their concerns that AI systems could threaten the survival of humanity. Some have raised concerns that machines will become superintelligent and thus be difficult to control. Several of these speculations envision an “intelligence chain reaction,” in which an AI system is charged with the task of recursively designing progressively more intelligent versions of itself and this produces an “intelligence explosion.” While formal work has not been undertaken to deeply explore this possibility, such a process runs counter to our current understandings of the limitations that computational complexity places on algorithms for learning and reasoning. However, processes of self-design and optimization might still lead to significant jumps in competencies.

My conclusion at this time is that cyborgization is the safer route. Building a secure exocortex is challenging enough, and building a decentralized exocortex would be a great accomplishment if Tauchain can do that. Building an AI based on rules and principles generated by minds as limited as ours would, in my opinion, be reckless and maybe a bit too dangerous. The good news is that I don’t think it’s currently possible to build a strong AI, so even if it were attempted it would likely fail, because there are some things humans can do that an AI hasn’t figured out how to do. Human computation is still superior in many ways to AI. This will likely be a very long discussion, so expect a part two in the future which progresses the conversation, and of course expect responses to anyone who replies with blog posts offering counterarguments.

References

Bonaci, T., Herron, J., Matlack, C., & Chizeck, H. J. (2014, June). Securing the exocortex: A twenty-first century cybernetics challenge. In Norbert Wiener in the 21st Century (21CW), 2014 IEEE Conference on (pp. 1-8). IEEE.

Denning, T., Matsuoka, Y., & Kohno, T. (2009). Neurosecurity: security and privacy for neural devices. Neurosurgical Focus, 27(1), E7.

Dietterich, T. G., & Horvitz, E. J. (2015). Rise of Concerns about AI: Reflections and Directions. Communications of the ACM, 58(10), 38-40.